Beyond Copilot: How AI Will Revolutionize the Software Development Lifecycle

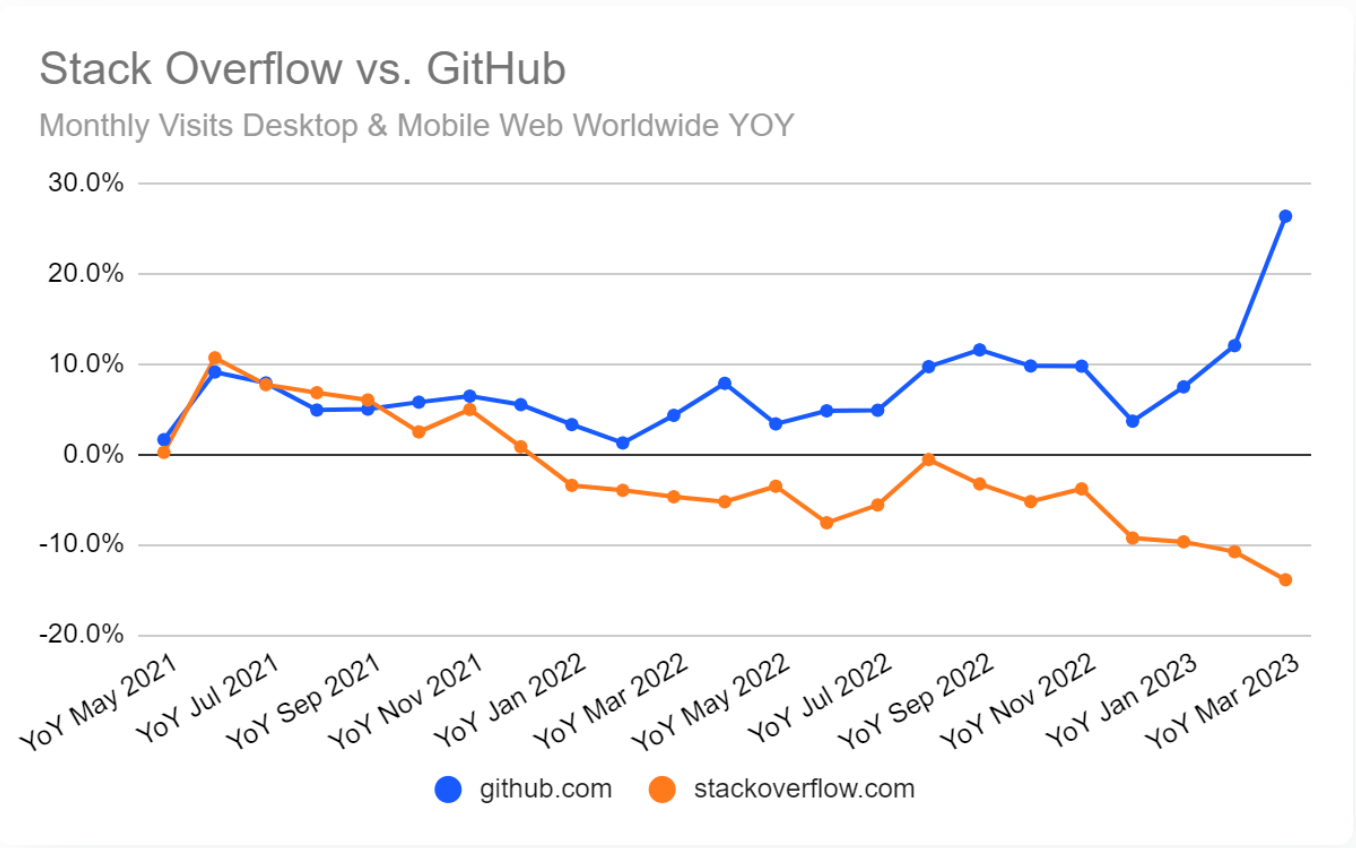

Within a year, coding assistants like GitHub Copilot went from sci-fi fantasy to an essential tool for developer productivity. Sure, they’re not always helpful, and sometimes they’re downright misleading, but they’re getting better every day. Meanwhile, traffic to Stack Overflow has been down by an average of 6% every month since January 2022. Make of that what you will.

So how is AI being applied to other aspects of the software development lifecycle? What secret sauce is brewing to turbocharge the engineering teams of tomorrow?

A recent paper entitled Large Language Models for Software Engineering: Survey and Open Problems [1] provides a comprehensive overview of 229 studies that apply large language models (LLMs) to software engineering. I’ll summarize the most interesting bits; reference numbers are kept consistent with the original paper for convenience.

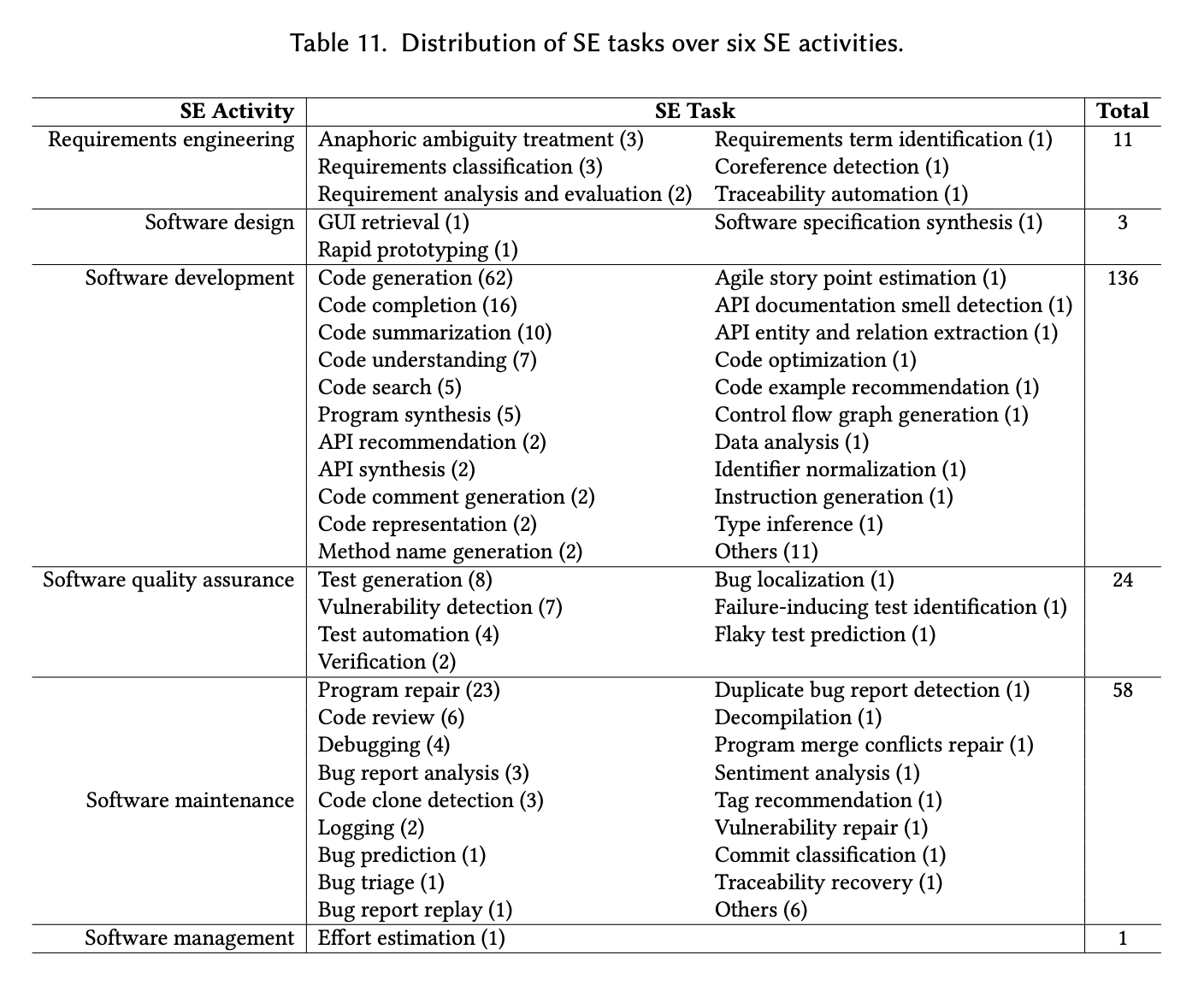

The authors break the software development lifecycle (SDLC) down into six categories; the table above shows the number of papers on specific tasks within each. Not every area of research will find its way into applications, but this gives us some idea of what’s coming down the pike.

Development

This area has seen the most extensive research by far, with 136 (of 229) studies covering 21 different tasks — the majority relating to code generation.

There’s a new model every week, but Codex, GPT-4, and Code Llama are the most heavily studied. These have all shown impressive capabilities in generating syntactically and semantically accurate code from natural language descriptions [38, 60]. LLMs excel at code completion, providing intelligent, context-aware recommendations that improve coding efficiency through tools like GitHub Copilot [258]. Other applications include code summarization [4, 16], program synthesis [155], API recommendation [343], and data analysis [78].
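To make the difference between "syntactically" and "semantically" accurate concrete, here is a minimal Python sketch of how a generated snippet might be checked. It assumes the model’s output arrives as a source string and that a few input/output examples are available: syntactic accuracy is approximated by whether the code parses, semantic accuracy by whether it passes the examples. The helper `check_generated_code`, its `entry_point` parameter and the sample snippets are all hypothetical, and real evaluations run untrusted model output in a sandbox rather than calling `exec` directly.

```python
import ast
from typing import Any, Callable


def check_generated_code(
    source: str,
    entry_point: str,
    examples: list[tuple[tuple[Any, ...], Any]],
) -> dict[str, bool]:
    """Classify a generated snippet as syntactically and/or semantically correct.

    'syntactic': the source parses as Python.
    'semantic': the function named `entry_point` returns the expected
    value for every (args, expected) example.
    """
    result = {"syntactic": False, "semantic": False}
    try:
        ast.parse(source)
    except SyntaxError:
        return result  # not valid Python, so there is nothing to run
    result["syntactic"] = True

    namespace: dict[str, Any] = {}
    try:
        # WARNING: exec runs arbitrary code; sandbox real model output.
        exec(source, namespace)
        func: Callable[..., Any] = namespace[entry_point]
        result["semantic"] = all(func(*args) == expected for args, expected in examples)
    except Exception:
        pass  # crashes or a missing function leave 'semantic' as False
    return result


# Three hypothetical model outputs for "return the largest element of a list".
candidates = {
    "broken": "def largest(xs)\n    return max(xs)\n",  # missing colon
    "wrong": "def largest(xs):\n    return xs[0]\n",    # parses, wrong logic
    "right": "def largest(xs):\n    return max(xs)\n",
}
examples = [(([3, 1, 2],), 3), (([5],), 5), (([-4, -2],), -2)]

for name, src in candidates.items():
    print(name, check_generated_code(src, "largest", examples))
```

Here `broken` fails both checks, `wrong` parses but fails the `[-4, -2]` example, and `right` passes both. Benchmarks such as HumanEval score models on the test-based check, since code that merely parses says little about whether it does what was asked.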

The 2023 Stack Overflow survey asked developers which AI tools they were using. Of the 21 options listed this year, usage was dominated by the two most popular products: ChatGPT (83%) and GitHub Copilot (56%).

Bad news for Stack Overflow

Quality Assurance / Testing

Nearly 25 studies applied LLMs to software testing.

LLMs have been used to generate test suites and to pinpoint flaky tests effectively [72]. For verification, combining LLMs with formal methods has shown promise in automated program repair [32]. Overall, LLMs demonstrate considerable capability for software testing, and in future agents could reduce the cost of QA by tackling problems that are hard to evaluate automatically, such as exploratory testing.
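A flaky test is one that both passes and fails on the same, unchanged code. One conventional way to find such tests is to rerun each test many times and look for mixed outcomes, which becomes expensive on a large suite; a learned predictor like the one in [72] aims to flag flaky tests without that repeated execution. The Python sketch below shows only the rerun baseline, not the approach from [72], and the test functions and the `runs` default of 20 are hypothetical choices for illustration.

```python
import random
from typing import Callable


def detect_flaky(test: Callable[[], None], runs: int = 20) -> bool:
    """Rerun `test` up to `runs` times; flag it as flaky on mixed outcomes."""
    outcomes: set[str] = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add("pass")
        except Exception:
            # Any exception, including AssertionError, counts as a failure.
            outcomes.add("fail")
        if len(outcomes) == 2:
            return True  # passed and failed on unchanged code: flaky
    return False  # consistently passed (or consistently failed) in every run


def test_stable() -> None:
    # Deterministic: always passes.
    assert sorted([3, 1, 2]) == [1, 2, 3]


def test_timing_dependent() -> None:
    # Hypothetical stand-in for a race condition: fails about 30% of the time.
    assert random.random() < 0.7


random.seed(0)  # fixed seed so the demo is repeatable
print("test_stable flaky?", detect_flaky(test_stable))
print("test_timing_dependent flaky?", detect_flaky(test_timing_dependent))
```

A test that happens not to fail within `runs` attempts is reported as stable, so rerunning can only under-report flakiness. Raising `runs` trades CI time for recall, and that cost is the appeal of predictors that avoid reruns altogether.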

Perhaps the most specialized product on the market today for AI test generation is Codium.ai, though more general products like Cursor (which provides an IDE and relies upon GPT-4) also have this capability.

Software Maintenance

Over 55 studies applied LLMs to a diverse range of maintenance tasks.

For bug fixing, models like Codex and ChatGPT could plausibly repair over 60% of defects in benchmark datasets [354, 355]. LLMs have also been shown to improve code review accuracy [285, 318], debugging [136], and duplicate bug detection [296]. LLMs can aid bug fixing by analyzing bug reports, creating tests that reproduce the bug, and potentially generating patches, speeding up the whole bug-fixing process.

One thing to bear in mind when interpreting the results of these studies is that benchmark datasets are probably not representative of the kind of bug reports you might be dealing with. More on this topic in a follow-up post.
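The bug-fixing workflow described above (reproduce the bug with a test, then generate and check candidate patches) is commonly structured as a generate-and-validate loop. The minimal Python sketch below assumes a toy bug and replaces the model with a hypothetical stub, `propose_patches`, that returns hard-coded candidates; in a real tool, that is where an LLM would be prompted with the bug report and the faulty code. All other names (`bug_report_test`, `regression_tests`, `repair`) are likewise illustrative. A candidate is accepted only if it passes both the new bug-reproducing test and the pre-existing regression tests. In the program repair literature such a patch is usually called plausible: passing the available tests does not guarantee it is correct.

```python
from typing import Callable, Optional

MeanFn = Callable[[list[float]], float]

# Faulty code under repair: meant to average a list, but always divides by 2.
# Existing tests only used two-element lists, which is how the bug slipped through.
BUGGY_SOURCE = "def mean(xs):\n    return sum(xs) / 2\n"


def propose_patches(bug_report: str, source: str) -> list[str]:
    """Hypothetical stand-in for an LLM prompted with the report and source.

    It ignores its inputs and returns fixed candidates for the demo.
    """
    return [
        "def mean(xs):\n    return sum(xs) / 2.0\n",      # cosmetic change, still wrong
        "def mean(xs):\n    return sum(xs) / len(xs)\n",  # actual fix
    ]


def load(source: str) -> MeanFn:
    """Execute a candidate source string and return its `mean` function."""
    namespace: dict = {}
    exec(source, namespace)  # sandbox this for real model output
    return namespace["mean"]


def bug_report_test(mean: MeanFn) -> bool:
    """Test derived from the bug report: mean([1, 2, 3]) should be 2."""
    return mean([1, 2, 3]) == 2


def regression_tests(mean: MeanFn) -> bool:
    """Pre-existing tests that every patch must keep passing."""
    return mean([4, 4]) == 4 and mean([-1, 1]) == 0


def repair(bug_report: str, source: str) -> Optional[str]:
    """Return the first plausible patch, or None if none is found."""
    # Step 1: the new test must fail on the original code, or it cannot
    # tell a real fix apart from a change that does nothing.
    if bug_report_test(load(source)):
        return None
    # Step 2: validate candidates in order against every test.
    for candidate in propose_patches(bug_report, source):
        try:
            fixed = load(candidate)
            if bug_report_test(fixed) and regression_tests(fixed):
                return candidate  # plausible, but still needs human review
        except Exception:
            continue  # candidates that fail to compile or crash are discarded
    return None


patch = repair("mean([1, 2, 3]) returns 3.0, expected 2", BUGGY_SOURCE)
print(patch)
```

The first candidate is rejected because it still returns 3.0 for `[1, 2, 3]`, and the `len(xs)` version is returned. Note that the buggy code already passes the regression tests, since they only use two-element lists; without the test derived from the bug report, neither candidate could be told apart from the original.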

Software Management

LLMs remain scarcely applied in software management, with just one study exploring effort estimation. Significant research is needed to realize LLMs’ potential in planning, costing, tracking, and governance. This is a thornier area to research because real-world datasets are scarce. Companies like Atlassian have the right data, but it remains to be seen whether they can innovate and deliver a meaningful product here.

Way back in 1987, Frederick P. Brooks wrote No Silver Bullet: Essence and Accident in Software Engineering, in which he argues that any significant improvement in the productivity of software engineering projects will have to come from the planning and analysis stage, and that we are already in the realms of diminishing returns when it comes to development tooling.

> The hardest single part of building a software system is deciding precisely what to build. No other part of the conceptual work is so difficult as establishing the detailed technical requirements, including all the interfaces to people, to machines, and to other software systems. No other part of the work so cripples the resulting system if done wrong. No other part is more difficult to rectify later.

—

Reach Out

We’re working on unlocking some of these untapped opportunities for high-performance teams. If you’re a developer or you manage an engineering team and you want to gain a competitive advantage, reach out!

I’m also interested in how you would like to see AI applied within your team, whether that’s bug triage, testing, code reviews, documentation generation, or something completely different. Where’s the pain? Send me an email.

References

- [1] Fan, A., Gokkaya, B., Harman, M., Lyubarskiy, M., Sengupta, S., Yoo, S., & Zhang, J. M. (2023). Large Language Models for Software Engineering: Survey and Open Problems.

- [32] Yiannis Charalambous, Norbert Tihanyi, Ridhi Jain, Youcheng Sun, Mohamed Amine Ferrag, and Lucas C Cordeiro. 2023. A New Era in Software Security: Towards Self-Healing Software via Large Language Models and Formal Verification. arXiv preprint arXiv:2305.14752 (2023).

- [60] Yihong Dong, Xue Jiang, Zhi Jin, and Ge Li. 2023. Self-collaboration Code Generation via ChatGPT. arXiv preprint arXiv:2304.07590 (2023).

- [72] Sakina Fatima, Taher A Ghaleb, and Lionel Briand. 2022. Flakify: A black-box, language model-based predictor for flaky tests. IEEE Transactions on Software Engineering (2022).

- [78] Michael Fu and Chakkrit Tantithamthavorn. 2022. GPT2SP: A transformer-based agile story point estimation approach. IEEE Transactions on Software Engineering 49, 2 (2022), 611–625.

- [258] Rohith Pudari and Neil A Ernst. 2023. From Copilot to Pilot: Towards AI Supported Software Development. arXiv preprint arXiv:2303.04142 (2023).

- [296] Giriprasad Sridhara, Sourav Mazumdar, et al. 2023. ChatGPT: A Study on its Utility for Ubiquitous Software Engineering Tasks. arXiv preprint arXiv:2305.16837 (2023).

- [318] Rosalia Tufano, Simone Masiero, Antonio Mastropaolo, Luca Pascarella, Denys Poshyvanyk, and Gabriele Bavota. 2022. Using pre-trained models to boost code review automation. In Proceedings of the 44th International Conference on Software Engineering. 2291–2302.

- [343] Moshi Wei, Nima Shiri Harzevili, Yuchao Huang, Junjie Wang, and Song Wang. 2022. Clear: contrastive learning for API recommendation. In Proceedings of the 44th International Conference on Software Engineering. 376–387.

- [355] Chunqiu Steven Xia and Lingming Zhang. 2023. Keep the Conversation Going: Fixing 162 out of 337 bugs for $0.42 each using ChatGPT. arXiv preprint arXiv:2304.00385 (2023).