The Dark Side of Generative AI: Part 1 — Understanding the Security and Privacy Risks

An overview of the main security and privacy risks developers should consider when building products that leverage Generative AI.

Note: This is Part 1 of a three-part series focusing on the main security and privacy aspects of building applications that leverage Generative AI technology.

The Rise in Popularity of Generative AI Models

Generative Artificial Intelligence (AI) has rapidly gained popularity and found its way into a wide range of applications, empowering products with the ability to generate realistic content such as images, videos, text, and even music. Models such as DALL-E and GPT-4 are enabling advancements in areas such as art, entertainment, advertising, and content creation. These models are trained on vast amounts of data and learn to generate new content that closely resembles the patterns and styles present in their training data.

Why is it important?

While generative AI, including Large Language Models (LLMs), offers exciting opportunities, it also introduces new risks and challenges. In this post, we will explore the security and privacy considerations associated with generative AI projects; the implications of these risks, along with potential mitigations, will be discussed in the next post of this series.

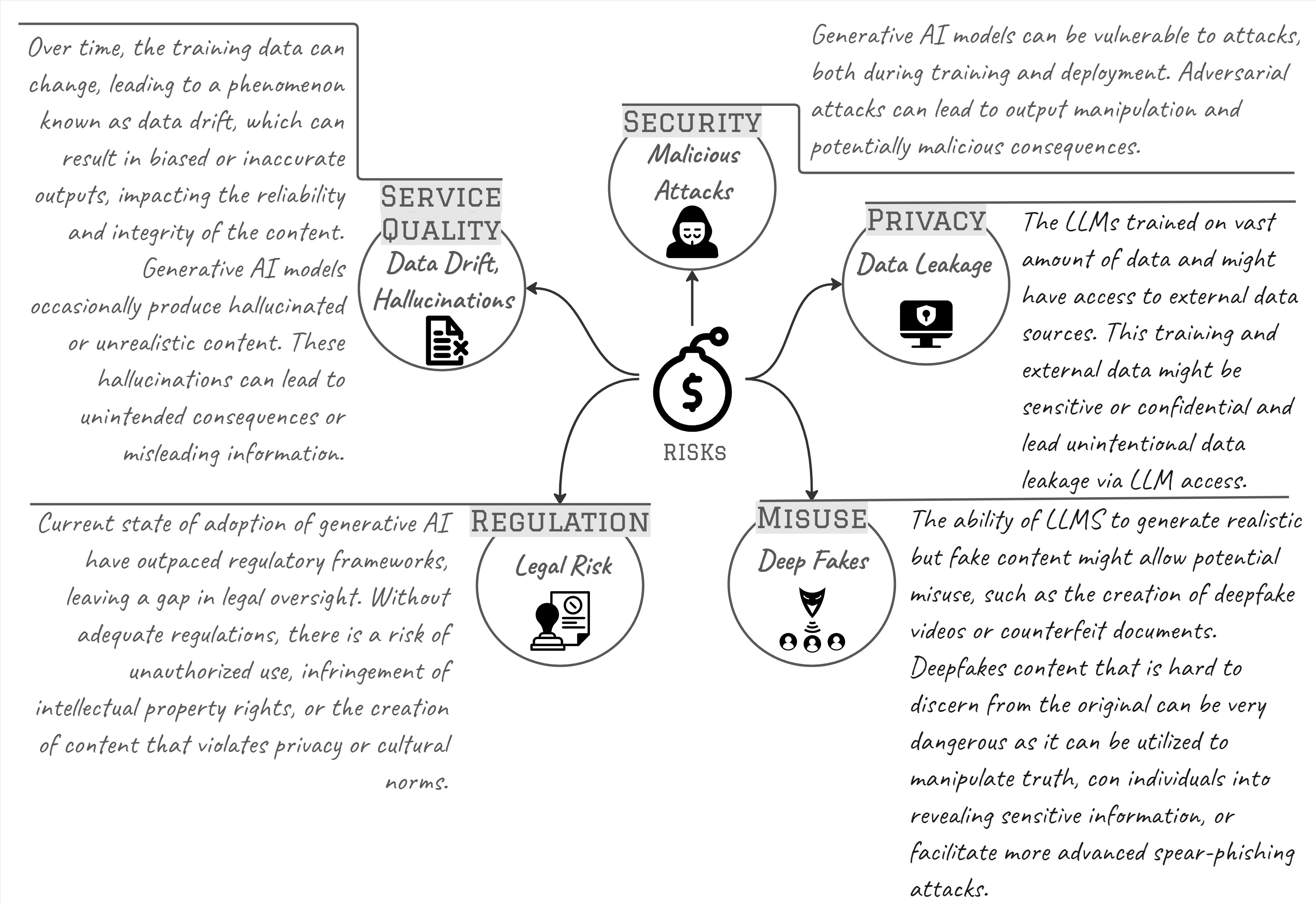

Main Risk Categories

To get a better perspective on where we need to focus, I will list the main risk categories. For completeness, I will also mention non-security risks, but I won't elaborate on them in this post. These risks can affect businesses and individuals alike.

Who is to take responsibility for the risks?

The responsibility for the risks presented by generative AI technology must be shared between the developer of the application using the technology and the user of the application.

Developer Responsibility:

- Ethical Design of the application, taking into account potential misuse and unintended negative impact.

- Accuracy and Safety of the generated content, ensuring the application does not pose potential harm to users or society.

- Transparency and Explainability in how the generative AI technology works, making its limitations and potential biases clear to users.

- Security of the generative AI application, so that user data is protected and unauthorized access is prevented.

User Responsibility:

- Informed, Risk-based Trust in and usage of the application, so that the inherent reliability problems of generated content cannot cause more than an acceptable level of negative impact. Use the application only in scenarios where the risk can be managed.

- Ethical Application of the technology, avoiding its use for malicious purposes or for spreading misinformation.

- Feedback and Reporting to the developers on any issues, concerns, or security incidents encountered while using the generative AI technology, including any misuse or unethical behavior users come across.

It is also important to note that policymakers play a crucial role in managing the risks associated with generative AI technology, by establishing forward-thinking regulations that encourage innovation while protecting society’s interests. More on that in a follow-up post.

Application Vendors / Developers Implications

Vendors must implement security programs that account for the unique risks of Generative AI technology and address them during the threat modeling, implementation, and testing phases. There are a few industry frameworks and tools that can be of immense value to security teams implementing such programs:

- MITRE ATLAS™: an enumeration of adversary tactics and techniques against AI systems, based on red-team demonstrations and real-world attacks.

- OWASP Top 10 for LLM Applications: an educational resource for developers, designers, architects, managers, and organizations about the potential security risks of deploying and managing Large Language Models.

- NIST AI Risk Management Framework: a framework developed in collaboration with the public and private sectors to better manage the risks that artificial intelligence (AI) poses to individuals, organizations, and society.

User Implications

Users of applications built with LLMs need to understand how much trust they can place in the generated content. Generative AI tools for software development are a good example. It's well established that generative coding tools can introduce insecure code, with one Stanford study suggesting that software engineers who use code-generating AI are more likely to introduce security vulnerabilities into the apps they develop. If users understand that risk and take responsibility for it, they can benefit greatly from these coding tools while keeping the risk under control.

Call to Action

While generative AI offers immense potential, careful consideration of the security and privacy implications is crucial. By addressing these risks and ensuring ethical use, we can harness the power of generative AI to create innovative applications while safeguarding individuals’ rights and preserving the integrity of the generated content.

If you're embarking on a new generative AI project and want to make sure you develop it responsibly and protect your users' data, we at Atchai will be happy to discuss how we can help.

Let's build products together that are both capable and conscientious.